Have you ever worried about the costs of using ChatGPT for your projects? Or perhaps you work in a field with strict data governance rules, making it difficult to use cloud-based AI solutions?

If so, running Large Language Models (LLMs) locally could be the answer you’ve been looking for.

Local LLMs offer a cost-effective and secure alternative to cloud-based options. By running models on your own hardware, you can avoid the recurring costs of API calls and keep your sensitive data within your own infrastructure. This is particularly beneficial in industries like healthcare, finance, and legal, where data privacy is paramount.

Experimenting and tinkering with LLMs on your local machine can also be a fantastic learning opportunity, deepening your understanding of AI and its applications.

What is a local LLM?

A local LLM is simply a large language model that runs locally, on your computer, eliminating the need to send your data to a cloud provider. This means you can harness the power of an LLM while maintaining full control over your sensitive information, ensuring privacy and security.

By running an LLM locally, you have the freedom to experiment, customize, and fine-tune the model to your specific needs without external dependencies. You can choose from a wide range of open-source models, tailor them to your specific tasks, and even experiment with different configurations to optimize performance.

While there might be upfront costs for suitable hardware, you can avoid the recurring expenses associated with API calls, potentially leading to significant savings in the long run. This makes local LLMs a more cost-effective solution, especially for high-volume usage.
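To make the trade-off concrete, here is a minimal sketch of the break-even arithmetic. The function name and all figures are hypothetical and only illustrate the calculation; plug in your own hardware price, API bill, and electricity cost.

```python
def break_even_months(hardware_cost: float,
                      monthly_api_cost: float,
                      monthly_power_cost: float = 0.0) -> float:
    """Months until a one-time hardware purchase pays for itself.

    All inputs are assumptions supplied by the caller, in the same currency.
    """
    monthly_savings = monthly_api_cost - monthly_power_cost
    if monthly_savings <= 0:
        # Running locally never pays off if it costs more per month than the API.
        return float("inf")
    return hardware_cost / monthly_savings


# Hypothetical example: a 1500 workstation, 150/month in API calls,
# 25/month in extra electricity.
print(break_even_months(1500, 150, 25))  # 12.0
```

With these made-up numbers the hardware pays for itself after a year. At low usage the break-even point can be many years away, which is why the savings argument mostly applies to high-volume workloads.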

Can I run an LLM locally?

So, you’re probably wondering, “Can I actually run an LLM on my local workstation?” The good news is that you likely can, provided you have a relatively modern laptop or desktop! However, your hardware can significantly affect how quickly the model answers prompts and how well it performs overall.

Let’s look at 3 components you’ll need for experimenting with local LLMs.

Hardware requirements

While not strictly necessary, having a PC or laptop with a dedicated graphics card is highly recommended. This will significantly improve the performance of LLMs, as they can leverage the GPU for faster computations. Without a dedicated GPU, LLMs might run quite slowly, making them impractical for real-world use.

LLMs can be quite resource-intensive, so it’s essential to have enough RAM and storage space to accommodate them. The exact requirements will vary depending on the specific LLM you choose, but having at least 16GB of RAM and a decent amount of free disk space is a good starting point.
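As a rough way to judge whether a model fits in your RAM or VRAM, you can estimate its footprint from the parameter count and the precision of its weights. The sketch below is a rule of thumb, not an exact formula: the function name is hypothetical, and the 20% overhead for context and runtime buffers is an assumption that varies by tool and context length.

```python
def estimate_model_memory_gb(params_billions: float,
                             bits_per_weight: int,
                             overhead: float = 1.2) -> float:
    """Rough memory estimate in GB for loading a model.

    params_billions: model size, e.g. 8 for an 8B model.
    bits_per_weight: 16 for half precision, 4 for 4-bit quantization.
    overhead: assumed multiplier for context and runtime buffers.
    """
    bytes_per_weight = bits_per_weight / 8
    # 1 billion parameters at 1 byte each is roughly 1 GB.
    weights_gb = params_billions * bytes_per_weight
    return round(weights_gb * overhead, 1)


print(estimate_model_memory_gb(8, 4))   # 4.8
print(estimate_model_memory_gb(8, 16))  # 19.2
```

Under these assumptions, a 4-bit quantized 8B model fits comfortably on a 16GB machine, while the same model at 16-bit precision does not.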

Software requirements

Besides the hardware, you also need the right software to effectively run and manage LLMs locally. This software generally falls into three categories:

- Servers: these run and manage LLMs in the background, handling tasks like loading models, processing requests, and generating responses. They provide the essential infrastructure for your LLMs. Some examples are Ollama and Llamafile.

- User interfaces: these provide a visual way to interact with your LLMs. They allow you to input prompts, view generated text, and potentially customize the model’s behavior. User interfaces make it easier to experiment with LLMs. Some examples are OpenWebUI and LobeChat.

- Full-stack solutions: these are all-in-one tools that combine the server and the user interface components. They handle everything from model management to processing and provide a built-in visual interface for interacting with the LLMs. They are particularly suitable for users who prefer a simplified setup. Some examples are GPT4All and Jan.

Open source LLMs

Last, but not least, you need the LLMs themselves. These are the large language models that will process your prompts and generate text. There are many different LLMs available, each with its own strengths and weaknesses. Some are better at generating creative text formats, while others are suited for writing code.

Where can you download the LLMs from? One popular source for open-source LLMs is Hugging Face. They have a large repository of models that you can download and use for free. Here are some of the most popular LLMs to get started with:

- Llama 3: The latest iteration in Meta AI’s Llama series, known for its strong performance across various natural language processing tasks. It’s a versatile model suitable for a wide range of applications.

- Mistral 7B: A relatively lightweight and efficient model, designed to perform well even on consumer-grade hardware. It’s a good option for those who want to experiment with LLMs without needing access to powerful servers.

- LLaVA: An innovative large multimodal model that excels at understanding both images and text, making it ideal for tasks like image captioning, visual question answering, and building interactive multimodal dialogue systems.

When choosing an LLM, it’s important to consider the task you want it to perform, as well as the hardware requirements and the size of the model. Some LLMs can be quite large, so you’ll need to make sure you have enough storage space to accommodate them.


GitHub – n8n-io/self-hosted-ai-starter-kit: The Self-hosted AI Starter Kit is an open-source template that quickly sets up a local AI environment. Curated by n8n, it provides essential tools for creating secure, self-hosted AI workflows.


5 best ways to run LLMs locally

Let’s explore 5 of the most popular software options available for running LLMs locally. These tools offer a range of features and capabilities to help you get started with local LLMs.

Ollama (+ OpenWebUI)

Use case: Ollama is ideal for quickly trying out different open-source LLMs, especially for users comfortable with the command line. It’s also the go-to tool for homelab and self-hosting enthusiasts who can use Ollama as an AI backend for various applications.

Ollama is a command-line tool that simplifies the process of downloading and running LLMs locally. It has a simple set of commands for managing models, making it easy to get started.

While Ollama itself is primarily a command-line tool, you can enhance its usability by pairing it with OpenWebUI, which provides a graphical interface for interacting with your LLMs.

Pros:

- Simple and easy to use

- Supports a wide range of open-source models

- Runs on most hardware and major operating systems

Cons:

- Primarily command-line based (without OpenWebUI), which may not be suitable for all users.

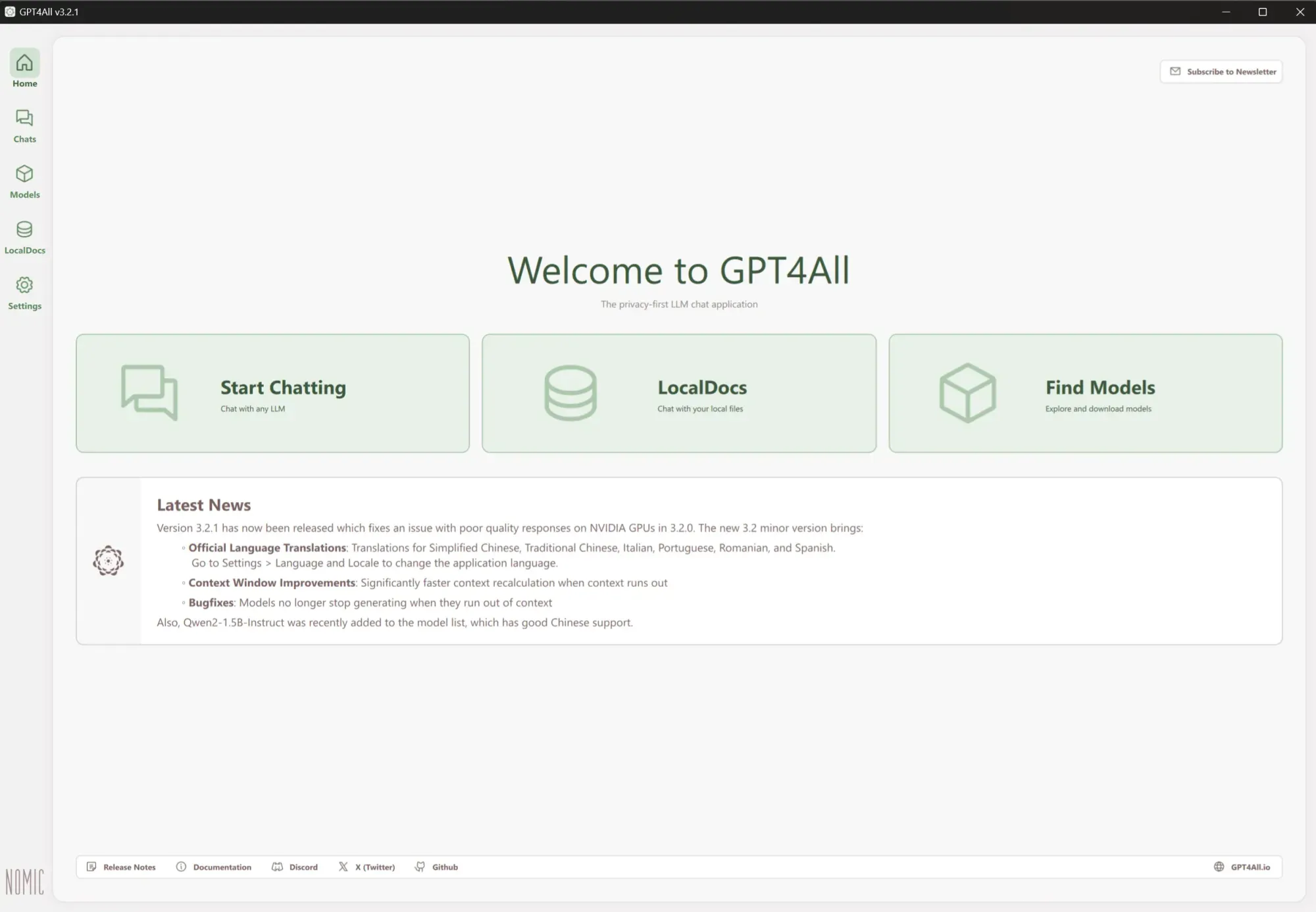

GPT4All

Use case: General-purpose AI applications including chatbots, content generation, and more. The ability to chat with your own PDF files as context makes it a valuable tool for knowledge extraction and research.

GPT4All is designed to be user-friendly, offering a chat-based interface that makes it easy to interact with the LLMs. It has out-of-the-box support for “LocalDocs”, a feature allowing you to chat privately and locally with your documents.

Pros:

- Intuitive chat-based interface

- Runs on most hardware and major operating systems

- Open-source and community-driven

- Enterprise edition available

Cons:

- May not be as feature-rich as some other options, lacking in areas such as model customization and fine-tuning.

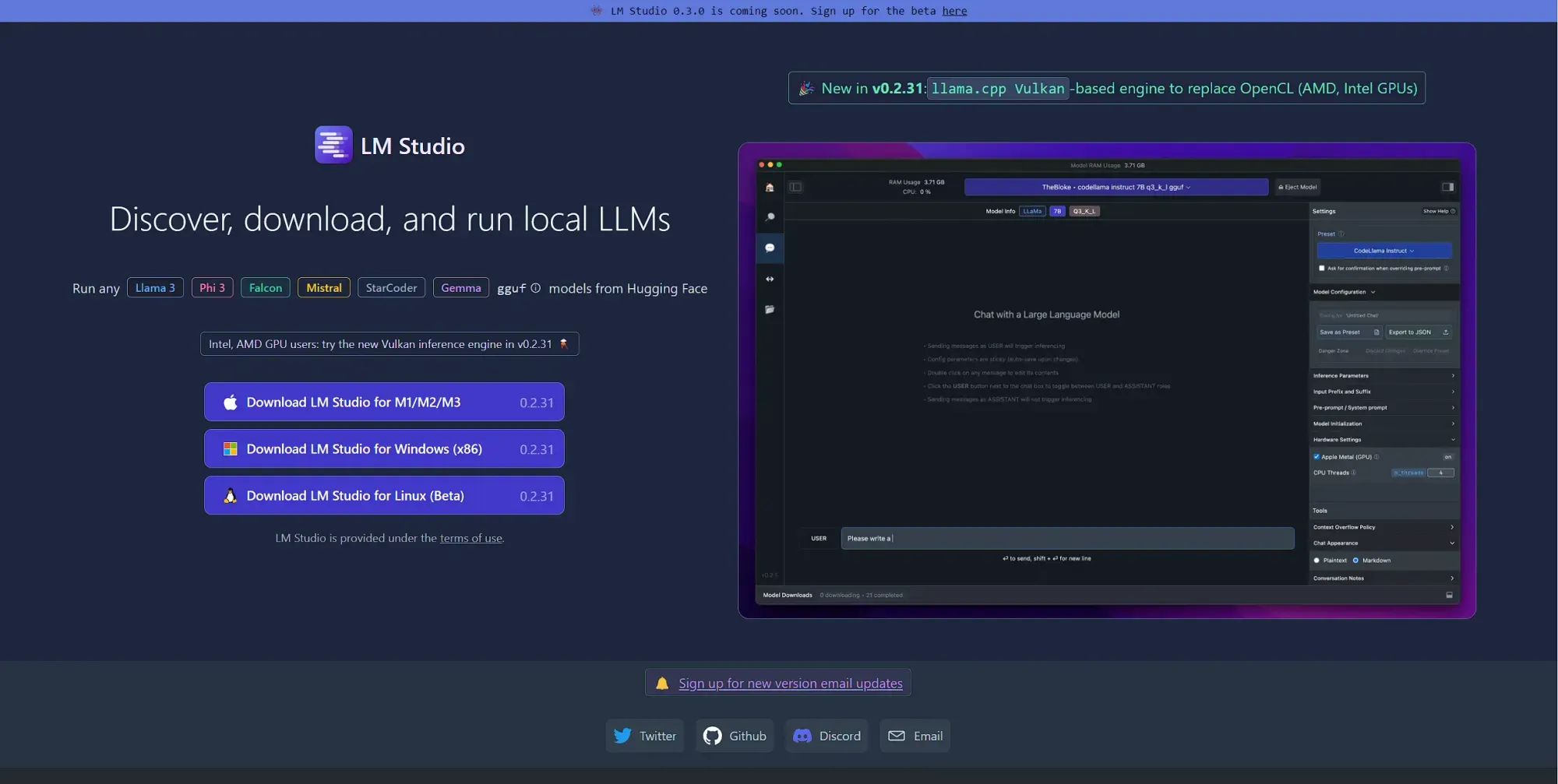

LM Studio

Use case: Excellent for customizing and fine-tuning LLMs for specific tasks, making it a favorite among researchers and developers seeking granular control over their AI solutions.

LM Studio is a platform designed to make it easy to run and experiment with LLMs locally. It offers a range of tools for customizing and fine-tuning your LLMs, allowing you to optimize their performance for specific tasks.

Pros:

- Model customization options

- Ability to fine-tune LLMs

- Track and compare the performance of different models and configurations to identify the best approach for your use case.

- Runs on most hardware and major operating systems

Cons:

- Steeper learning curve compared to other tools

- Fine-tuning and experimenting with LLMs can demand significant computational resources.

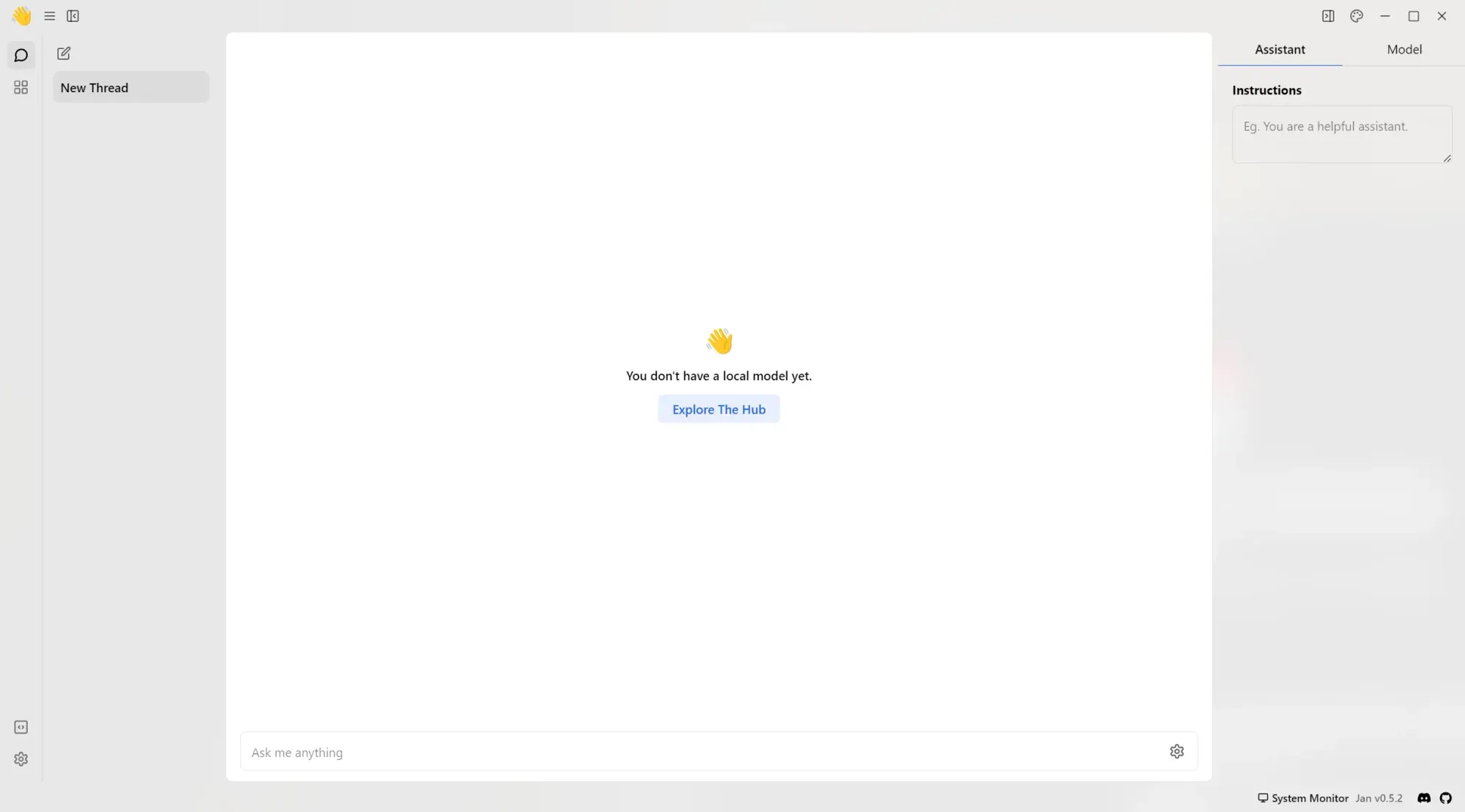

Jan

Use case: Can be used to interact with both local and remote (cloud-based) LLMs.

Jan is another noteworthy option for running LLMs locally. It places a strong emphasis on privacy and security.

One of Jan’s unique features is its flexibility in terms of server options. While it offers its own local server, Jan can also integrate with Ollama and LM Studio, utilizing them as remote servers. This is particularly useful when you want to use Jan as a client and have LLMs running on a more powerful server.

Pros:

- Strong focus on privacy and security

- Flexible server options, including integration with Ollama and LM Studio

- Jan offers a user-friendly experience, even for those new to running LLMs locally

Cons:

- While compatible with most hardware, support for AMD GPUs is still in development.

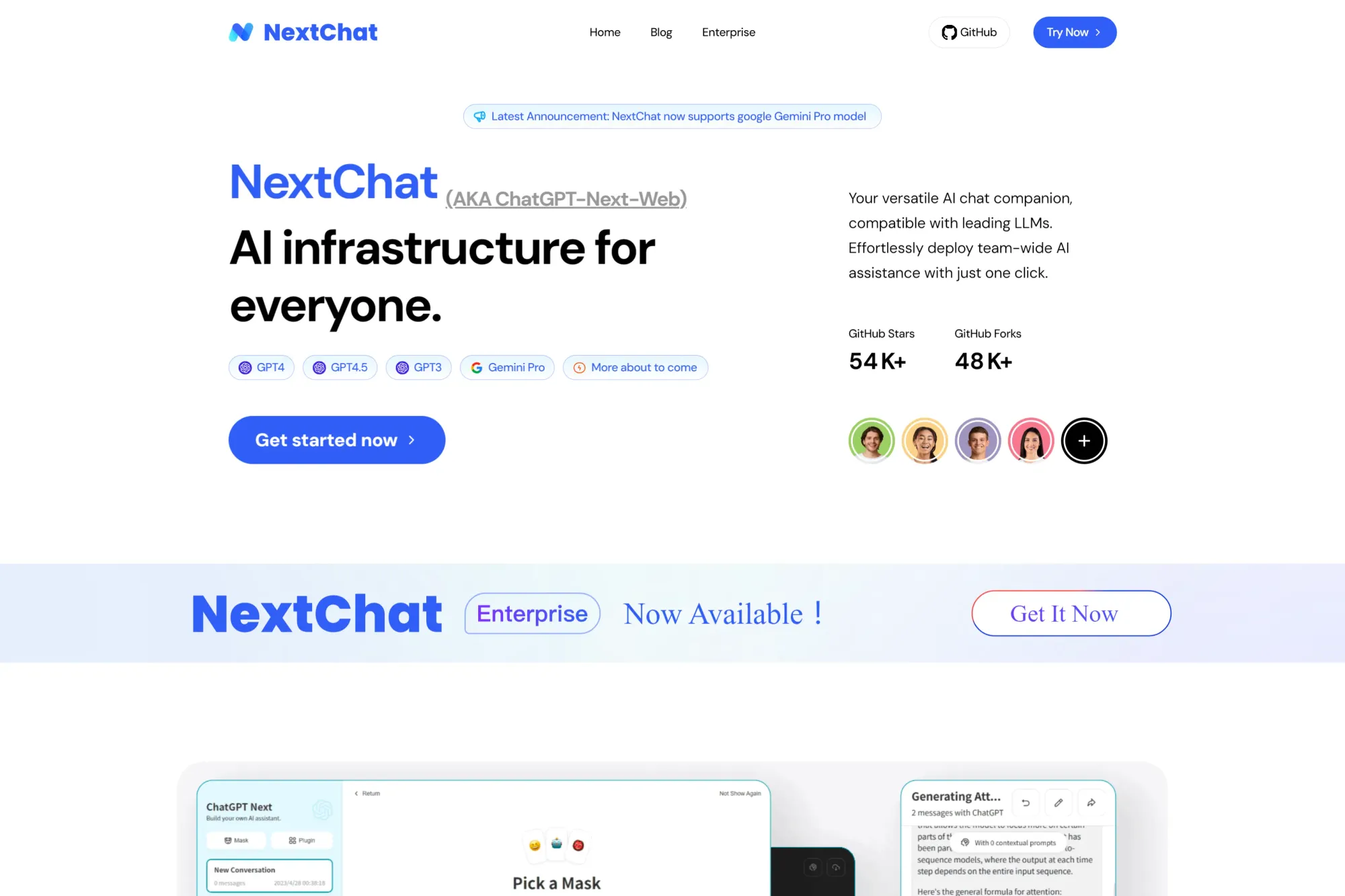

NextChat

Use case: Can be used to build and deploy conversational AI experiences like chatbots and virtual assistants with compatibility for both open-source and closed-source LLMs.

NextChat is a versatile platform designed for building and deploying conversational AI experiences. Unlike the other options on this list, which primarily focus on running open-source LLMs locally, NextChat excels at integrating with closed-source models like ChatGPT and Google Gemini.

Pros:

- Compatibility with a wide range of LLMs, including closed-source models

- Robust tools for building and deploying conversational AI experiences

- Enterprise-focused features and integrations

Cons:

- May be overkill for simple local LLM experimentation

- Geared towards more complex conversational AI applications.

How to run a local LLM with n8n?

To run a local Large Language Model (LLM) with n8n, you can use the Self-Hosted AI Starter Kit, designed by n8n to simplify the process of setting up AI on your own hardware.

This kit includes a Docker Compose template that bundles n8n with top-tier local AI tools like Ollama and Qdrant. It provides an easy installation process, AI workflow templates, and customizable networking configurations, enabling you to quickly deploy and manage AI workflows locally, ensuring full control over your data and infrastructure.

n8n uses LangChain to simplify the development of complex interactions with LLMs, such as chaining multiple prompts together, implementing decision making, and interacting with external data sources. The low-code approach that n8n uses fits well with the modular nature of LangChain, allowing users to assemble and customize LLM workflows without extensive coding.

Now, let’s also explore a quick local LLM workflow!

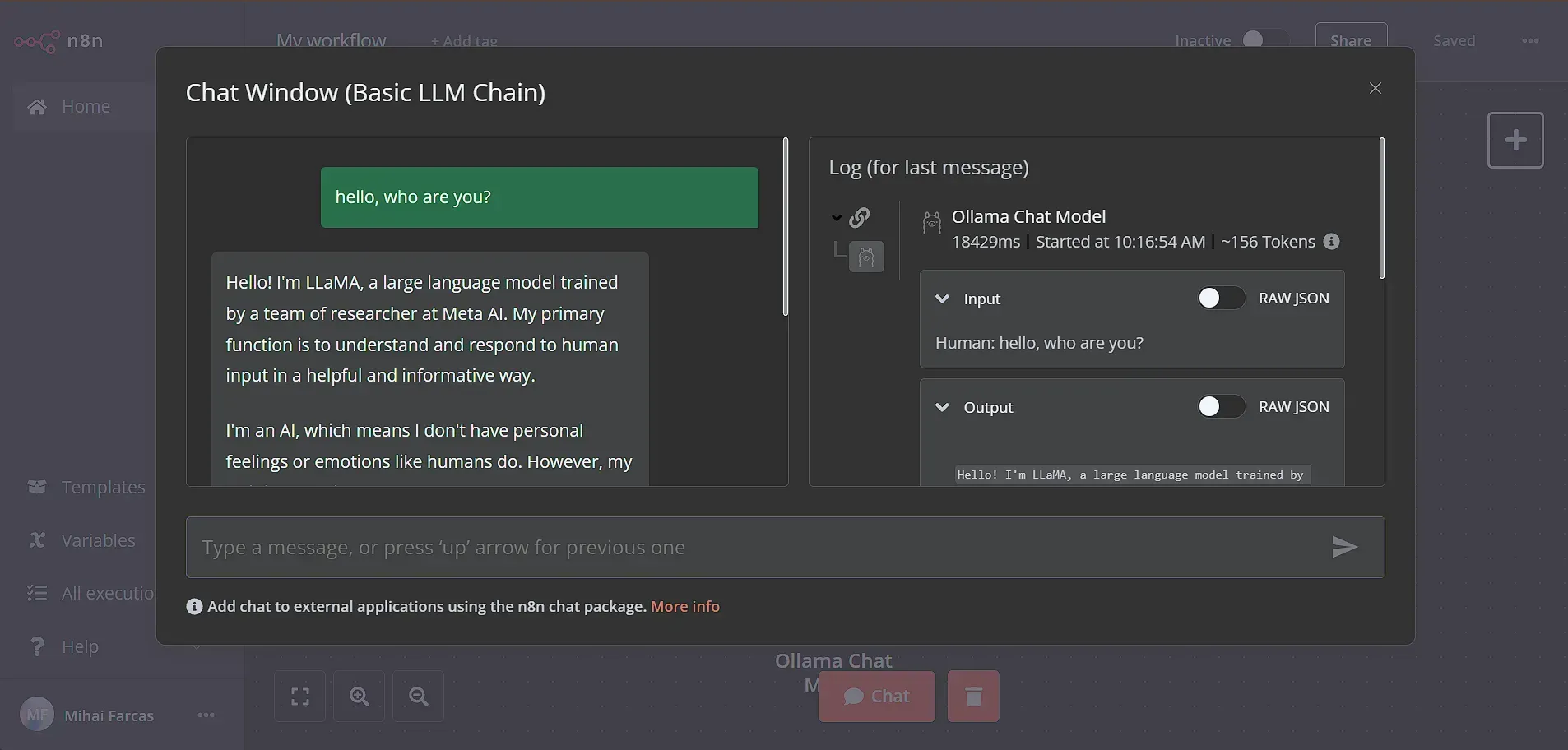

With this n8n workflow, you can easily chat with your self-hosted Large Language Models (LLMs) through a simple, user-friendly interface. By hooking up to Ollama, a handy tool for managing local LLMs, you can send prompts and get AI-generated responses right within n8n:

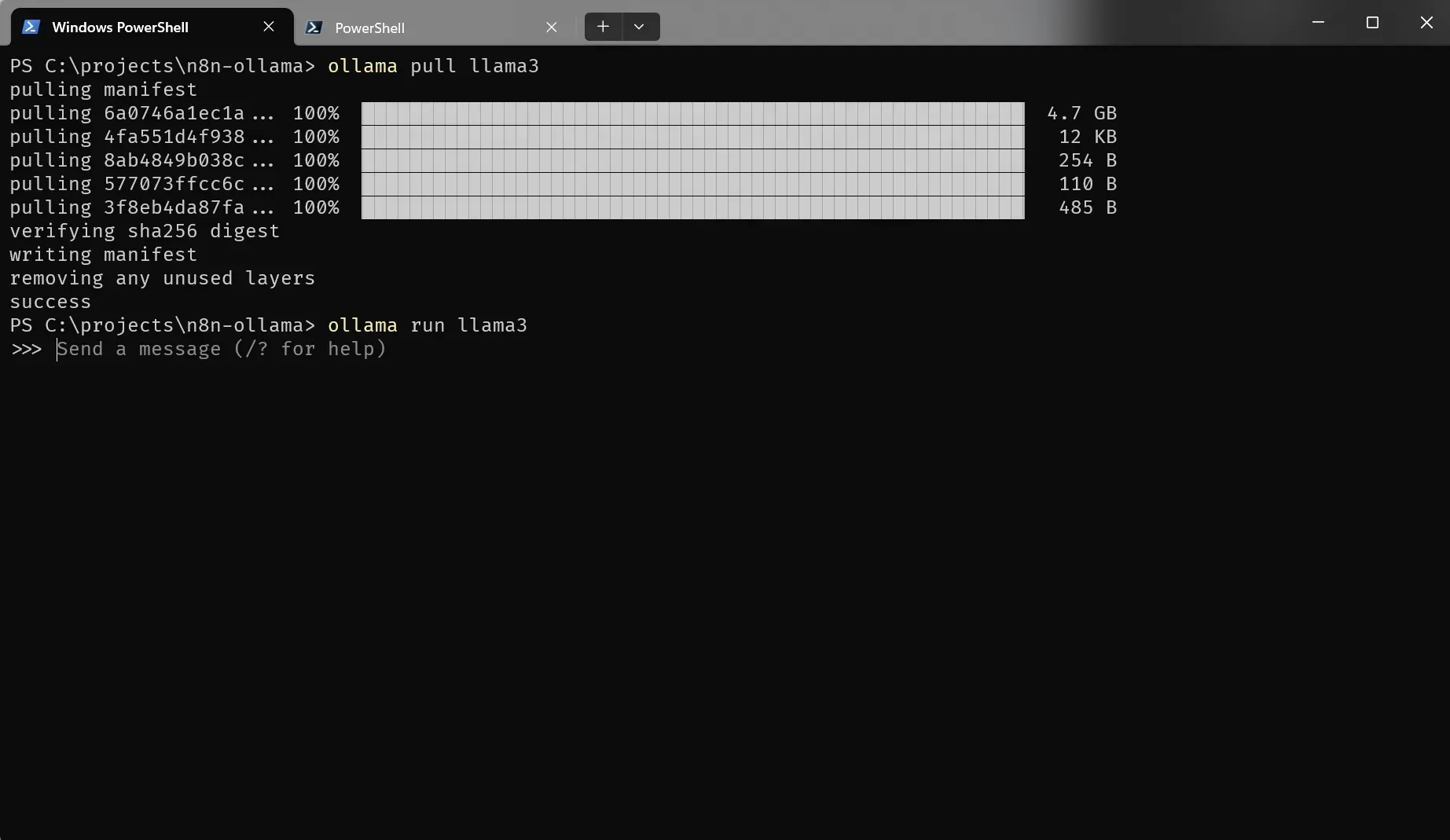

Step 1: Install Ollama and run a model

Installing Ollama is straightforward: just download the Ollama installer for your operating system. You can install Ollama on Windows, Mac, or Linux.

After you’ve installed Ollama, you can pull a model such as Llama3 with the `ollama pull llama3` command.

Depending on the model, the download can take some time. This version of Llama3, for example, is 4.7 GB. After the download is complete, run `ollama run llama3` and you can start chatting with the model right from the command line!

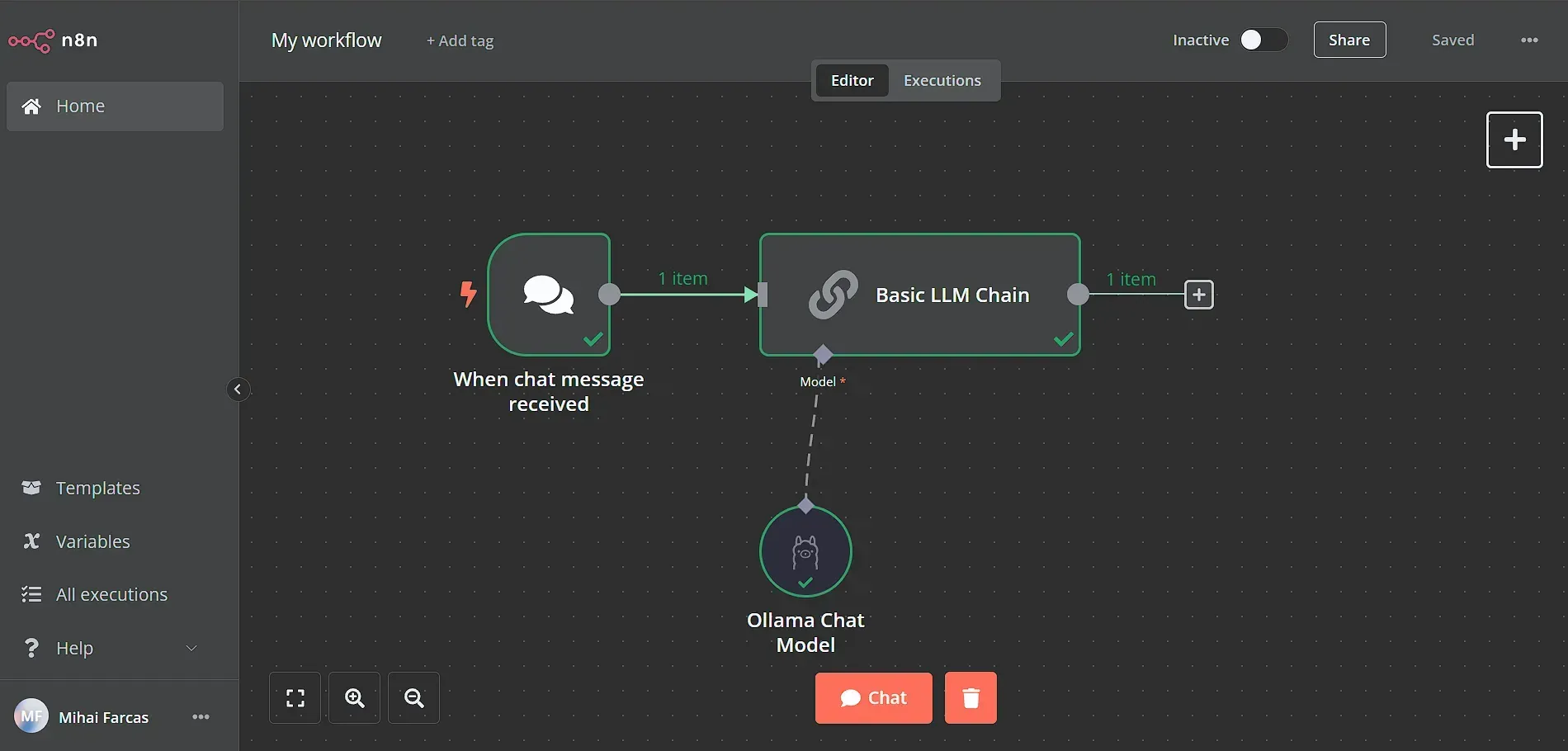
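Besides the command line, you can also talk to the model from code through Ollama's local HTTP API. The following is a minimal sketch using only the Python standard library; it assumes Ollama is listening on its default port, that the `llama3` model has already been pulled, and the helper names are our own.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_generate_request(prompt, model="llama3", base_url=OLLAMA_URL):
    """Build a POST request for Ollama's /api/generate endpoint."""
    # stream=False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3", base_url=OLLAMA_URL, timeout=120):
    """Send a prompt to the local model and return the generated text."""
    request = build_generate_request(prompt, model, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

With Ollama running, calling `generate("Explain what a local LLM is in one sentence.")` should return the model's answer as a string. The first call can be slow while the model is loaded into memory, hence the generous timeout.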

Step 2: Set up a chat workflow

Let’s now set up a simple n8n workflow that uses your local LLM running with Ollama. Here is a sneak peek of the workflow we will build:

Start by adding a Chat trigger node, which is the workflow starting point for building chat interfaces with n8n. Then we need to connect the chat trigger to a Basic LLM Chain where we will set the prompt and configure the LLM to use.

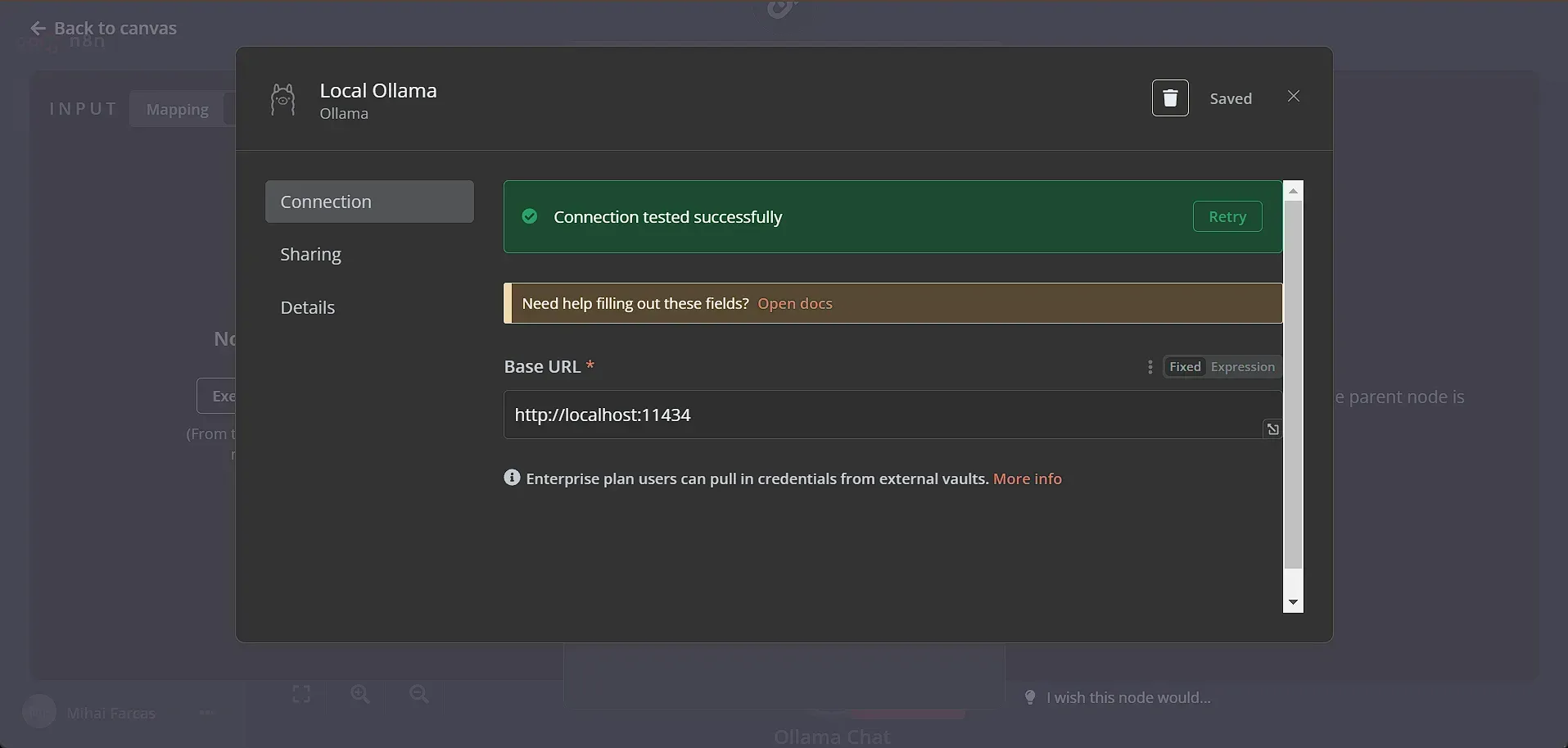

Step 3: Connect n8n with Ollama

Connecting Ollama with n8n couldn’t be easier thanks to the Ollama Model sub-node! Ollama runs as a background process on your computer and exposes an API on port 11434. You can check if the Ollama API is running by opening a browser window and accessing http://localhost:11434, where you should see a message saying “Ollama is running”.
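If you prefer to script this check rather than open a browser, here is a small sketch that does the same thing with the Python standard library. The function names are hypothetical, and the check simply looks for the “Ollama is running” message mentioned above.

```python
import urllib.request


def is_ollama_banner(body: str) -> bool:
    """Return True if the response body contains Ollama's status message."""
    return "Ollama is running" in body


def is_ollama_running(base_url="http://localhost:11434", timeout=2.0) -> bool:
    """Return True if an Ollama server answers at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            body = response.read().decode("utf-8", errors="replace")
    except OSError:
        # Covers connection refused, timeouts, and HTTP errors
        # (urllib's URLError and HTTPError are subclasses of OSError).
        return False
    return is_ollama_banner(body)
```

Calling `is_ollama_running()` returns `False` when nothing is listening on the port, which is a quick way to rule out a stopped Ollama service before debugging the n8n connection.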

For n8n to be able to communicate with Ollama’s API via localhost, both applications need to be on the same network. If you are running n8n in Docker, you need to start the Docker container with the `--network=host` parameter. That way, the n8n container can access any port on the host machine.

To set a connection between n8n and Ollama, we simply leave everything as default in the Ollama connection window:

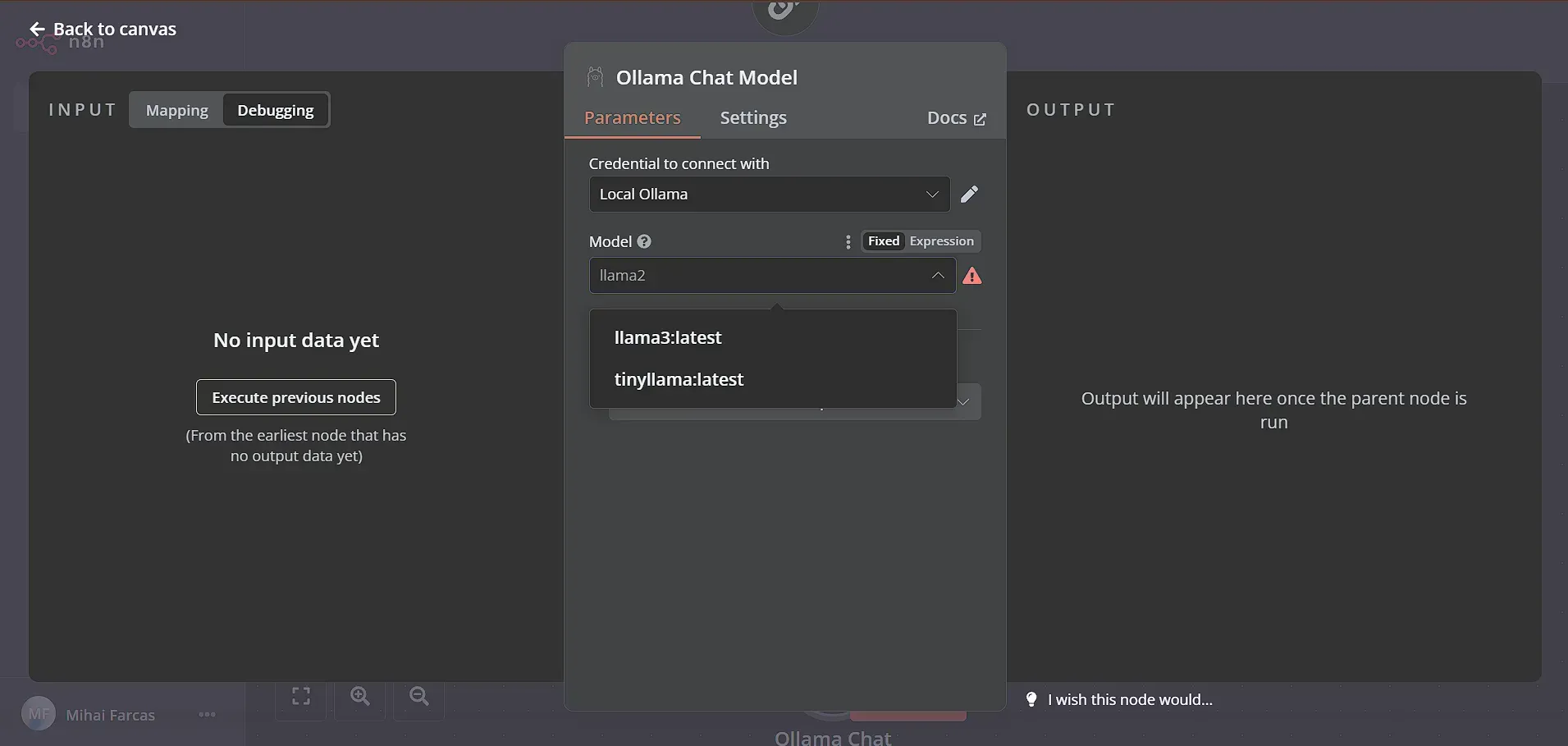

After the connection to the Ollama API is successful, you should now see all the models you’ve downloaded in the Model dropdown. Just pick the llama3:latest model we downloaded earlier.
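If the dropdown stays empty, it can help to ask Ollama directly which models it has available. The sketch below queries Ollama's `/api/tags` endpoint, which lists locally downloaded models; the helper names are our own, and this is an illustration of the API rather than a description of how the n8n node is implemented.

```python
import json
import urllib.request


def parse_model_names(tags_payload: dict) -> list[str]:
    """Extract model names such as 'llama3:latest' from an /api/tags response."""
    return [model["name"] for model in tags_payload.get("models", [])]


def list_local_models(base_url="http://localhost:11434", timeout=5.0) -> list[str]:
    """Ask the local Ollama server which models have been downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        payload = json.loads(response.read().decode("utf-8"))
    return parse_model_names(payload)
```

If `list_local_models()` does not include `llama3:latest`, the model has not been pulled on the machine that n8n is connected to.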

Step 4: Chat with Llama3

Next, let’s chat with our local LLM! Click the Chat button on the bottom of the workflow page to test it out. Type any message and your local LLM should respond. It’s that easy!
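For the curious, a multi-turn chat like this can also be reproduced outside n8n with Ollama's `/api/chat` endpoint, which accepts the conversation history as a list of messages. This is a minimal sketch under the same assumptions as before (default port, `llama3` already pulled, hypothetical helper names); it is not a description of what n8n does internally.

```python
import json
import urllib.request


def build_chat_payload(history, user_message, model="llama3"):
    """Append the new user message to the history and build the request body."""
    messages = list(history) + [{"role": "user", "content": user_message}]
    return {"model": model, "messages": messages, "stream": False}


def chat(history, user_message, model="llama3",
         base_url="http://localhost:11434", timeout=120):
    """Send one chat turn to Ollama and return the updated history."""
    payload = build_chat_payload(history, user_message, model)
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # The reply is a message dict with "role" and "content" keys.
        reply = json.loads(response.read().decode("utf-8"))["message"]
    return payload["messages"] + [reply]
```

Starting from an empty list and passing the returned history into the next `chat(...)` call lets the model see earlier turns, which is what makes follow-up questions work.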

Wrap up

Running LLMs locally is not only doable but also practical for those who prioritize privacy, cost savings, or want a deeper understanding of AI.

Thanks to tools like Ollama, which make it easier to run LLMs on consumer hardware, and platforms like n8n, which help you build AI-powered applications, using LLMs on your own computer is now simpler than ever!

What’s next?

If you also want to explore more AI workflows, not necessarily ones that use a local LLM, take a look at this selection: